AI: Where Are We Headed?

A 360-degree view of what seems to be going on

Wow! AI will cure cancer! Solve the Climate Crisis! Free us from all boring work!

ARRRGGHH!! AI Will Destroy Us!

Meh! Calm Down Everyone, AI is Not All That.

These are the three categories of AI stories we’ve seen across every form of media over the last few years, fueled by a combination of huge marketing efforts by Big Tech, breathless news and opinion coverage, and millions of individuals showing what is possible with a single prompt.

Are any of them right? What’s most right?

Of course, it’s not really possible to know for certain, but it IS worth looking at each argument in a bit of detail, as it gives us some clues as to how to navigate the future in a responsible way.

Let’s take a look at each argument in turn.

The Wow! Argument

Who makes this argument? Big Tech, AI Startups, and Bullish Investors

Yes, there is a lot of marketing hype around AI, but let’s face it, it’s already doing some remarkable things. If you sit at a desk, many of the things that took weeks to do before can now be done in seconds.

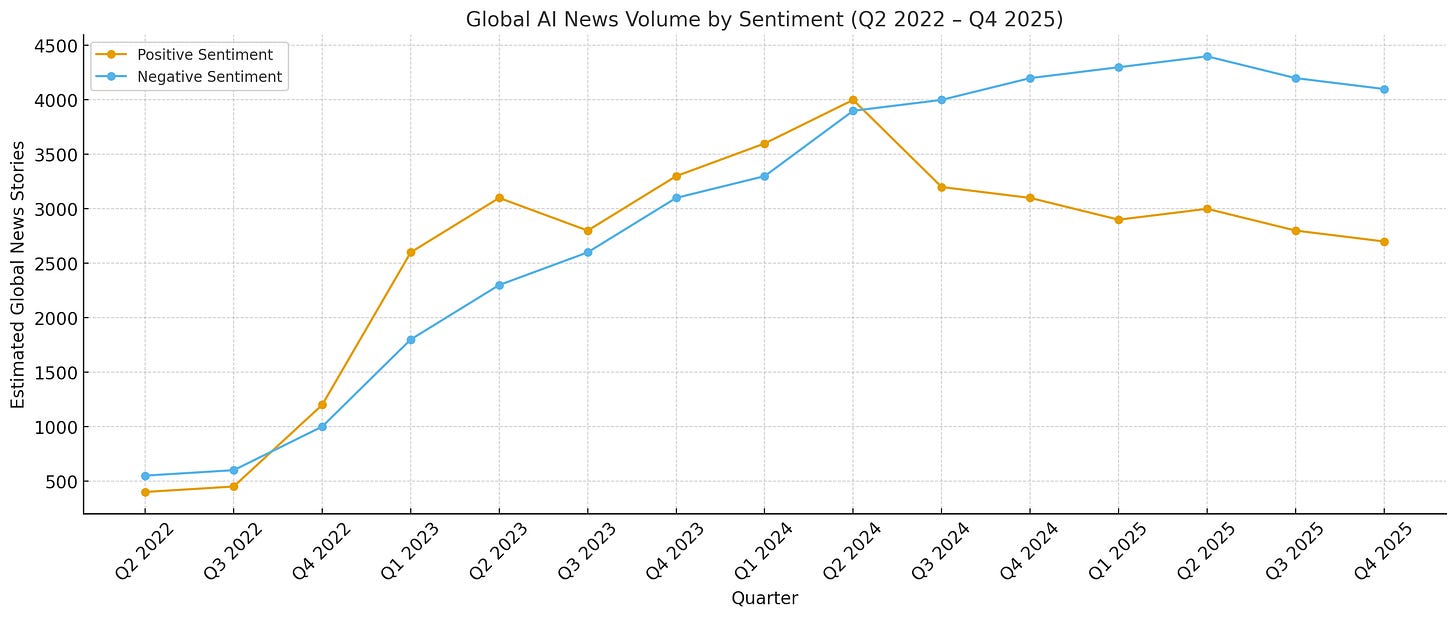

For example, while preparing this article, I asked ChatGPT to analyze positive and negative news stories about AI from all publicly available outlets globally, produce a chart summarizing them, and explain the main drivers behind the peaks in each type of coverage. The chart above is what it came up with in around 90 seconds.

You are probably not surprised by this (and you should, of course, be skeptical about how accurate it is), but that is a sign of the new world we are in. We have grown very used to the idea of AI doing things much faster than we can as humans, and often better. But the fact that it is no longer novel doesn’t make it any less important.

One-off “tricks” that AI can perform from an individual prompt are one thing, but we are also seeing it transform our world in meaningful ways.

For example, Google Maps now includes an AI-driven fuel-efficient routing option that analyzes traffic patterns, elevation, and engine type to recommend routes that use less fuel. Since its 2021 rollout, this feature is estimated to have prevented over 2.5 million metric tons of CO₂ emissions — roughly the same as removing 650,000 cars from the road for a year.

Meanwhile, at Khan Academy, the AI-powered teaching assistant Khanmigo is being used by students and teachers across U.S. school districts to deliver personalized guidance and lesson planning. In pilot programs, it’s been shown not just to boost engagement but to significantly improve math proficiency, especially among students who previously struggled. Rather than replacing educators, the AI supports them — freeing classroom time for deeper human connection while ensuring measurable academic gains.

There is every reason to believe that there will be far more amazing advances to come.

The ARRRGGHH! Argument

Who makes this argument? Science Fiction Writers, some academics, some high-profile AI researchers, and occasionally Elon Musk

This argument is really a combination of hypotheses, because there are a number of different ways AI could end up being really, really bad for us. Here are four broad categories:

The most worrying of all is an AI-driven Armageddon. Nick Bostrom’s alarming book Superintelligence outlines several versions of this, including scenarios where machines exceed human general intelligence and then use their smarts to build other machines an order of magnitude smarter than themselves, all before we have time to stop it. Some researchers even believe that apocalypse is an inevitable consequence of developing advanced technology: the more advanced our technology becomes, the more ways we build to destroy ourselves, until self-destruction becomes inevitable. This is even offered as one explanation for why we don’t see other advanced civilizations (the Fermi paradox): they kill themselves off before they can reach us.

The Bulletin of the Atomic Scientists does see AI as a potential existential risk: it has set the Doomsday Clock to its closest point ever to midnight, and has specifically cited risks from AI as one of the reasons.

If we avoid this spectacular death, we could just exhaust planetary resources or pollute ourselves to oblivion as we accelerate the AI race.

It’s no secret that today the Arctic is suddenly strategic, trade alliances are fracturing, and climate commitments are quietly being shelved in favor of keeping the lights on. What’s the common thread here? The data centers driving the AI revolution consume huge amounts of electricity and water, and depend on rare earth minerals. There is a real chance that the very technology we are building to innovate our way out of planetary environmental challenges destroys our planet along the way.

But even if we navigate this challenge well, we may still create a world where huge numbers of us humans are no longer useful.

For the first time, we are building technology that has the potential to learn and adapt to new tasks faster than humans can. An AI system that analyzes complex situations, makes judgment calls, and improves through experience isn’t just replacing current jobs; it’s competing for jobs that don’t exist yet.

And of course it’s not just software in isolation. All this talk of AI is causing many of us to take our eye off the ball when it comes to general-purpose robotics. The most dramatic effects will likely come when AI, robotics and automation truly converge, giving us systems that communicate and collaborate with each other, adjust in real time and perform physical work, continuously improving, never getting sick and working 24/7.

And as well as no longer being useful, we may also no longer be interested. We assume that humans have an innate need or desire to be creative and productive. But I think it’s reasonable to question that assumption. What if these desires are born out of a combination of needing to earn money and needing to make our own entertainment? Many of us already spend hours each day doing nothing other than doomscrolling on our phones. If that content becomes ten times more immersive, will we even want to do anything else?

The “Meh!” Argument

Who makes this argument? Bearish Investors, and a lot of LinkedIn…

This argument is really in the ascendant right now, and it’s a fairly easy one to make based on current data points. The theory here is that both the Wow! and ARRRGGHH! arguments are wildly overblown: AI does great party tricks, but not much else of real use, and certainly not at scale. As for destroying us? That was supposed to be nuclear weapons.

Yet we are still here…

The Meh! argument relies on the fact that betting on “it’s not really all that” almost always pays off. Those virtual reality headsets were supposed to be on all of our heads by now. And all of us were going to be buying hundreds of NFTs.

There are some interesting data points that back up the Meh! argument. It is absolutely true that many, possibly even most, large-scale AI investments have failed to date. Goldman Sachs has published research questioning whether the trillion-dollar AI infrastructure build-out will ever generate returns. McKinsey found that only 11% of companies worldwide are using generative AI at scale, with operations functions faring even worse.

It’s also true that some companies made layoffs specifically to replace people with AI, only to quietly hire people back months later when the AI couldn’t actually do the job. And multiple tech companies have announced “AI-first” strategies that have so far turned out to be “AI-complicated” realities.

It turns out that humans are still pretty useful. We can make judgment calls when the data is incomplete or contradictory. We can navigate ambiguous situations where there’s no clear right answer. We can often understand what a customer actually needs, as opposed to what they’re asking for. In other words, we can still bring distinctly human judgment, and there are still many cases where that is needed.

So Where Are We Actually Headed?

As I mentioned at the start, I don’t really know, and neither does anyone else, no matter how confident they sound. There are just too many variables at play, and of course, we live in a BANI world: brittle, anxious, nonlinear and incomprehensible.

That said, there are some things we can say with reasonable confidence. Right now, in 2025, the Meh! argument does make sense. There is no doubt that AI has been overhyped, we are probably in some form of AI stock market bubble, and humans will still be useful for some time.

But it’s also very likely that we are doing what humans have done for decades: overestimating the impact of technology in the short term (1-2 years) and underestimating its impact in the longer term (10 or more years), a pattern often called Amara’s Law. Trillions of dollars are being invested in AI, and sooner or later, those trillions will probably add up to something meaningful.

My personal view is that AI is part of a larger set of technology innovations (including robotics, automation and quantum computing) that will dramatically transform the world and our role in it. It’s likely that we are underestimating both the benefits and the risks, because we rely on outdated mental models to envision them. I’m not particularly comforted by the argument that we have not killed ourselves off yet in the nuclear age. Modern historians often point out that we very nearly did in 1983, and some even claim we are genuinely lucky not to have.

In my upcoming book, I make the argument that we are in an exponential phase of technology development, and the fundamental challenge for us as humans is that biological systems don’t develop exponentially.

Remember, in the billions of years that Earth has existed, humans have been dominant for only about 7,000 of them. There is absolutely no guarantee that we will remain relevant for much longer.

Despite all this, though, I absolutely do not believe we are doomed. Tomorrow, I’ll share my thoughts on what society needs to do to increase the likelihood that humans remain relevant in a world where AI is omnipresent.