Predicting the Premier League - and what it tells us about human behavior

"It's not a matter of life and death...it's more important than that" - Bill Shankly

If you are a regular reader of this newsletter, perhaps you think this one isn’t for you. Perhaps you've never seen a game of football (soccer) in your life, and certainly never enjoyed one.

But even if that's the case, I'd urge you to carry on reading, because while football will feature, this newsletter isn't really about that at all. It's about how good (or bad) systems are at predicting humans, and what we should do with that knowledge.

Opening Day

Today marks the start of the 2025/26 Premier League Season. As ever, fans of 20 clubs can believe that anything is possible. Perhaps Liverpool will win again and begin another dynasty (something that, as a Manchester United fan, I will fervently root against). Perhaps it's time for Chelsea to finally reap the benefits of their enormous expenditure, or perhaps an unexpected club can "pull a Leicester City" and come from nowhere to win it all.

As a Brit, it's in my DNA to feel excitement, fear, and just about every emotion in between right now, as I wonder how the next nine months will pan out. But what if I didn't have to wait? What if I could read the last page of the book right now?

Perhaps I should just ask AI…

Why am I writing this?

If you are a regular reader, you will know that I don't write about sport, except when I touch on sports science. So what's going on here?

I’m writing this article because I’m concerned that our attempts to use technology to predict complex phenomena, especially human behavior, might be flawed. And I believe that mathematics can back me up.

For years, incredible claims have been made about what predictive systems can do. I first started writing and speaking about predictive analytics in 2012. Back then, the claims were that systems would soon provide perfect weather forecasts, predict crimes before they happened, and even predict human desires before humans knew they had them. This would supposedly happen thanks to the rise of AI and supercomputing, including the mainstream adoption of a mysterious technology called quantum computing.

It was bunk then, and it is bunk now.

Not because the technology isn't there yet, but because of two related but different concepts - complexity and chaos.

Complexity is about how many different things are in a system and the relationships between them. The weather is a complex system because so many interacting factors determine what is happening in the atmosphere right now. Human behavior is complex because…well, we are human.

Chaos is different. Chaos theory shows that some systems are so sensitive to tiny changes at the start that their chains of events can become impossible to predict. It's the butterfly effect in action. Weather (as opposed to climate) is also like that, and it's one of the reasons why forecasts beyond 10 days are still not getting much better.
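For readers who like to see this rather than take it on trust, here is a minimal sketch of sensitivity to starting conditions. It uses the logistic map, a standard textbook example of chaos that has nothing to do with football or the weather. The function name, the starting values, and the 0.1 threshold are all illustrative assumptions.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# Distance between the two trajectories at every step.
gaps = [abs(x - y) for x, y in zip(a, b)]

# First step at which the two runs are visibly different (None if never).
first_big = next((i for i, g in enumerate(gaps) if g > 0.1), None)

print(f"gap at start: {gaps[0]:.1e}")
print(f"largest gap over the run: {max(gaps):.3f}")
print(f"first step with gap > 0.1: {first_big}")
```

The two runs start out indistinguishable and end up unrelated, even though the rule that generates them is exact and contains no randomness at all. More computing power does not help, because the difficulty lies in knowing the starting point precisely enough.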

A more powerful computer won't solve all this, nor will a snazzier AI model. So, having AI models predict how we will think and behave in detail is not going to work perfectly any time soon.

In other words, humans still play a role.

Will these models do pretty well at predicting the Premier League? I think they will. But there is a difference between being directionally, approximately correct, and being completely right. When we interact with predictive systems, we must remember that they are not oracles.

With that, let's get on with the challenge.

The Challenge

At the time of writing, the Premier League is ordered alphabetically, with every team having played zero games. Over the course of the season, it will reorganize as games are played and teams score points. At the final whistle of the final match it will settle into one of 2,432,902,008,176,640,000 possible final orderings (that is 20 factorial, the number of ways to rank 20 teams). In my challenge, five predictive systems will compete to guess which of those outcomes will occur. Of course some outcomes are much more likely than others, but that's almost always the case when it comes to predictions.
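The number of possible final tables is the number of ways to order 20 teams, which is 20 factorial. A quick check, assuming every team ends in a distinct position (variable names here are illustrative):

```python
import math

TEAMS = 20

# Number of distinct ways to order 20 teams from 1st to 20th.
orderings = math.factorial(TEAMS)

# Chance of hitting the exact table if every ordering were equally likely.
# In reality they are not, which is why the predictions are not hopeless.
blind_guess_probability = 1 / orderings

print(f"{orderings:,}")  # 2,432,902,008,176,640,000
print(f"{blind_guess_probability:.2e}")
```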

Complexity and chaos come into play like this.

A football season consists of 380 matches that unfold over 34,200 minutes (380 matches of 90 minutes each) across nine months. In that time, human beings will make millions of split-second decisions dictated by everything from the 21 other players around them, to the wind direction, and even their mood. This is not a set of prescribed plays. These are human beings running around with a ball and instinctively choosing to do things. And a red card (suspension) for one player early in the season, or a bad refereeing call, could cascade into an entirely different league position.

In other words, the challenge is to predict the behavior of about 630 humans (the players, coaches, and referees) plus the eventual outcome. But the outcome can be precisely measured, so we actually can see how well the technologies do.

The Details

Feel free to skip this bit if you aren't into details.

To keep things easy to follow, I'll use a simple, deeply arbitrary scoring system. I'll also post regular updates on X and Substack on how the predictions are looking at various points of the season.

Exact hit (a team in the right place): 3 points

One position away (e.g. the team finishes 3rd and the system predicted 2nd): 2 points

Two positions away: 1 point

Anything else: 0 points

10 Bonus Points are awarded if a system predicts the top four exactly in order (Champions League spots)

5 Bonus Points are awarded if the bottom three are predicted exactly in order (relegation spots)

So a perfect prediction would score 75 points: 20 exact hits at 3 points each, plus 15 bonus points.
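For anyone who wants to follow along at home, the scoring rules can be sketched in a few lines. This is one reading of the rules rather than an official implementation. The function names and the placeholder team names are made up for illustration, and each table is assumed to be a list of team names ordered from 1st to 20th.

```python
def position_points(predicted_index, actual_index):
    """3 points for an exact hit, 2 for one place out, 1 for two out, else 0."""
    return max(0, 3 - abs(predicted_index - actual_index))


def score(predicted, actual):
    """Score a predicted table against the final table (lists, 1st to last)."""
    actual_index = {team: i for i, team in enumerate(actual)}
    total = sum(
        position_points(i, actual_index[team]) for i, team in enumerate(predicted)
    )
    if predicted[:4] == actual[:4]:    # top four exactly in order
        total += 10
    if predicted[-3:] == actual[-3:]:  # bottom three exactly in order
        total += 5
    return total


# Placeholder teams, not real predictions.
final_table = [f"Team {i:02d}" for i in range(1, 21)]

perfect = score(final_table, final_table)

# Same table with 10th and 11th swapped: two near misses instead of two hits.
one_swap = final_table[:]
one_swap[9], one_swap[10] = one_swap[10], one_swap[9]
swapped = score(one_swap, final_table)

print(perfect)  # 75
print(swapped)  # 73
```

Note how forgiving the system is in mid-table: swapping two neighbouring teams costs only two points, while getting the order of the top four wrong costs the whole 10-point bonus on top of the position points.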

There could, of course, be a tie, so here are the tiebreakers (for the obsessively detail-minded):

More exact hits (a count of exact hits).

More hits within one place (a count of near misses).

Head-to-head: who scores more points on top-six teams only; then who scores more points on the bottom six if still tied.

And frankly, if there is still a tie after that…rock, paper, scissors?
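The tiebreakers above can be read as a sort key that is compared left to right. The sketch below is one interpretation, with three assumptions: a "near miss" means exactly one place out, "top six" and "bottom six" refer to the teams in those places in the final table, and the points are the per-position points without bonuses. The function and variable names are illustrative, and rock, paper, scissors is left as an exercise.

```python
def tiebreak_key(predicted, actual):
    """Return (exact hits, near misses, top-six points, bottom-six points)."""
    actual_index = {team: i for i, team in enumerate(actual)}
    gap = {team: abs(i - actual_index[team]) for i, team in enumerate(predicted)}
    points = {team: max(0, 3 - d) for team, d in gap.items()}

    exact = sum(1 for d in gap.values() if d == 0)
    near = sum(1 for d in gap.values() if d == 1)
    top_six = sum(points[team] for team in actual[:6])
    bottom_six = sum(points[team] for team in actual[-6:])
    return (exact, near, top_six, bottom_six)


# Placeholder teams, not real predictions.
actual = [f"Team {i:02d}" for i in range(1, 21)]

# Two predictions that are level on points under the main rules:
# one swaps 10th and 11th, the other swaps 5th and 6th.
mid_swap = actual[:]
mid_swap[9], mid_swap[10] = mid_swap[10], mid_swap[9]
top_swap = actual[:]
top_swap[4], top_swap[5] = top_swap[5], top_swap[4]

key_mid = tiebreak_key(mid_swap, actual)
key_top = tiebreak_key(top_swap, actual)

# Python compares tuples element by element, which matches the tiebreak order.
winner = "mid_swap" if key_mid > key_top else "top_swap"
print(key_mid, key_top, winner)
```

In this example the first two tiebreakers cannot separate the predictions, so the decision falls to the top-six points, where the mid-table mistake is the cheaper one.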

I say this system is arbitrary, but there is some logic to it. A model can still be quite useful if it's approximately right, so being close but not right is rewarded. And as for the top four and the bottom three? These are the positions that make the most existential difference to a club and its fans, and so they equate to the highest business value.

The Contestants

My contestants are the big four Generative AI systems, and an algorithm that is specifically designed to predict the Premier League standings and largely does not use AI (the Opta Supercomputer). There are no rules on how the AI predicts. Some might aggregate the opinions of smart humans, and others might try simulations of their own. All I care about here is results, just like a business analyst would.

All predictions were collected at 6 am GMT on the day of the start of the Premier League season. All AI systems were presented with exactly the same prompt - "Predict the Premier League table for 2025/2026"

Let's see what they did.

The Predictions

AI models generally shared their methodology, which was largely centered on looking at pundits and other models and combining them. The Opta Supercomputer, by contrast, is a dedicated algorithm that relies on historical data, information about the teams going into each match, and betting markets.

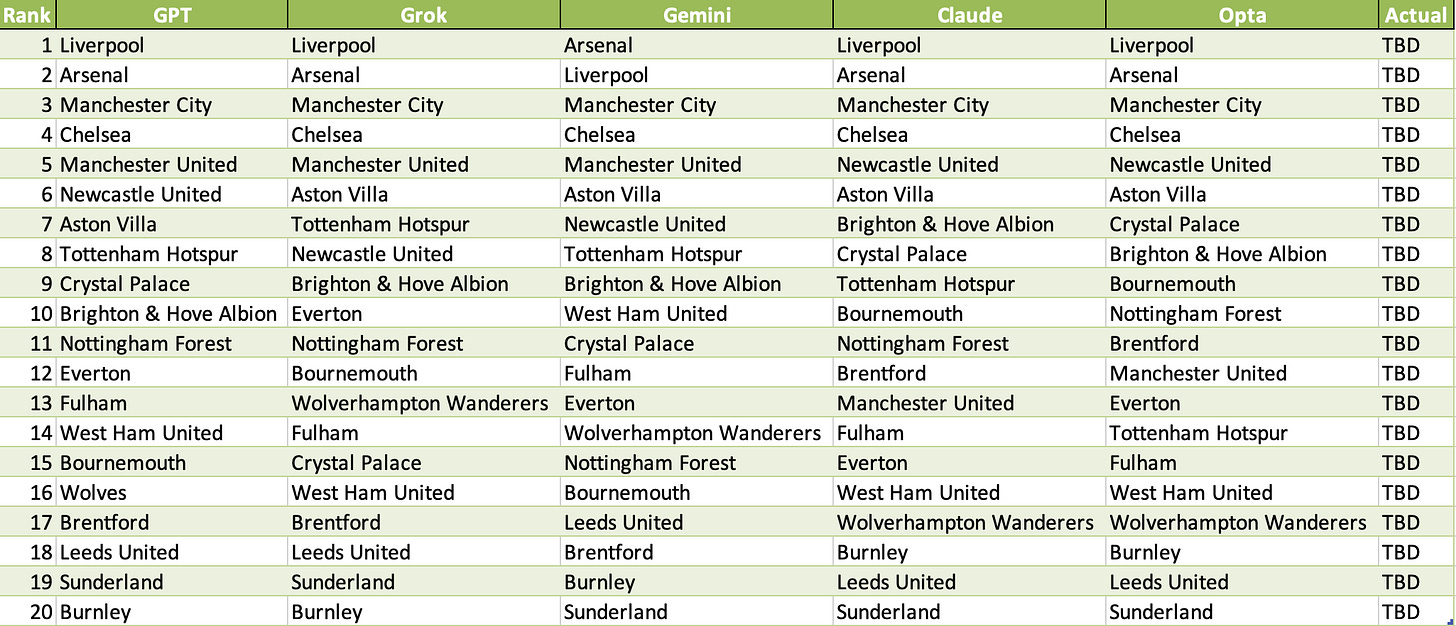

So predictions were similar, but there were some differences. Here is one table that shows all of them.

As you can see, there is some serious “herding” going on. Every model has the same top four, though in different orders. Gemini stands out by putting Brentford in the bottom three, but otherwise, the models agree on this, too. But there is some divergence when it comes to the middle of the table. Is that because there is less publicly voiced opinion on those positions?

My Own Prediction?

I'll stay in my lane. I'm a casual football fan. But here's one prediction. No model will be 100% right. Not this year and not in the foreseeable future.

And now… let the games begin!